Project Name

Process Complex Data Structures Using Apache Spark

Overview

Our client operates in the petroleum extraction industry and works with large volumes of complex structured data spread across many file types. They needed a robust, efficient system to handle data arriving in formats such as JSON, XML, and CSV, among others.

Challenges

The client struggled to manage a large, diverse collection of structured data distributed across multiple file formats. The main obstacles were data normalization and extensive data refinement: anomalies had to be detected and resolved at multiple levels within the dataset. The client wanted a resilient system that could handle this volume of complex structured data from various sources. They also needed to load the processed data efficiently into a designated data source, with version control in place so that changes could be tracked and earlier states consulted later.

Key issues include:

- Handling Complex Data Structures: The client's data was stored in various file formats, including JSON, XML, and CSV, each with intricate hierarchies. Normalizing this complex data posed a significant challenge.

- Detecting and Resolving Anomalies: The data contained anomalies at multiple levels, such as missing values, inconsistent data, and outliers. Detecting and resolving these anomalies required advanced data processing techniques.

- Efficient Data Loading and Version Control: The client needed a solution to load the processed data into a centralized data source on GCP efficiently. Moreover, they required the ability to maintain multiple versions of the data for future reference and analysis.
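
The versioning requirement in the last point can be illustrated with a small sketch. It is not the client's implementation: the directory layout (`v0001`, `v0002`, ...), the `data.jsonl` file name, the helper names, and the sample records are all hypothetical, and a temporary local directory stands in for the real target on GCP. The idea shown is simply that each load writes a new, immutable version instead of overwriting the previous one.

```python
import json
import tempfile
from pathlib import Path


def write_version(base_dir: Path, rows: list[dict]) -> Path:
    """Write rows as a new version directory under base_dir and return its path."""
    base_dir.mkdir(parents=True, exist_ok=True)
    # Existing versions are directories named v0001, v0002, ...
    existing = sorted(p.name for p in base_dir.glob("v[0-9][0-9][0-9][0-9]"))
    next_number = int(existing[-1][1:]) + 1 if existing else 1
    version_dir = base_dir / f"v{next_number:04d}"
    # mkdir raises if the directory already exists, so no version is overwritten.
    version_dir.mkdir()
    # One JSON object per line keeps versions easy to diff against each other.
    with open(version_dir / "data.jsonl", "w", encoding="utf-8") as out:
        for row in rows:
            out.write(json.dumps(row, sort_keys=True) + "\n")
    return version_dir


def read_version(version_dir: Path) -> list[dict]:
    """Read back the rows stored in one version directory."""
    with open(version_dir / "data.jsonl", encoding="utf-8") as src:
        return [json.loads(line) for line in src]


# Hypothetical records; a temporary directory stands in for the real target.
base = Path(tempfile.mkdtemp()) / "wells"
v1 = write_version(base, [{"well_id": "W-1", "depth_m": 1200.0}])
v2 = write_version(base, [{"well_id": "W-1", "depth_m": 1250.0}])
print(v1.name, v2.name)
```

Because earlier versions are never modified, any past load can be re-read for comparison or audit with `read_version`.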

Our Solution

We used Apache Spark, a widely adopted big data framework known for its processing and transformation capabilities, to manage the substantial influx of data from various file types. By leveraging Spark’s built-in parallel processing, we could quickly handle and transform the large volume of data. Our client required a versatile system capable of managing and transforming data from multiple file formats, including JSON, XML, CSV, and more. Spark’s data source APIs handle diverse file types, which made it a strong fit for this task.
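
To make the normalization step concrete, here is a minimal sketch of bringing JSON, XML, and CSV inputs into one flat row shape. It deliberately uses only the Python standard library rather than Spark so that it runs anywhere; in a Spark job the same flattening would typically be expressed with DataFrame readers such as `spark.read.json` and `spark.read.csv` plus functions like `explode`. The field names (`well_id`, `depth_m`, `pressure_kpa`) and the sample payloads are assumptions made up for illustration, not the client's schema.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# Hypothetical inputs: the same kind of reading delivered in three formats.
JSON_TEXT = """
{"well": {"id": "W-1", "readings": [
    {"depth_m": 1200, "pressure_kpa": 310.5},
    {"depth_m": 1250, "pressure_kpa": 322.0}
]}}
"""
XML_TEXT = '<well id="W-2"><reading depth_m="900" pressure_kpa="250.0"/></well>'
CSV_TEXT = "well_id,depth_m,pressure_kpa\nW-3,1500,410.2\n"


def row(well_id, depth_m, pressure_kpa):
    """Build one record in the common flat shape, with numeric fields as floats."""
    return {
        "well_id": well_id,
        "depth_m": float(depth_m),
        "pressure_kpa": float(pressure_kpa),
    }


def from_json(text):
    well = json.loads(text)["well"]
    # One output row per element of the nested "readings" array.
    return [row(well["id"], r["depth_m"], r["pressure_kpa"]) for r in well["readings"]]


def from_xml(text):
    well = ET.fromstring(text)
    # The well id lives on the parent element; readings are child elements.
    return [
        row(well.get("id"), r.get("depth_m"), r.get("pressure_kpa"))
        for r in well.iter("reading")
    ]


def from_csv(text):
    reader = csv.DictReader(io.StringIO(text))
    return [row(r["well_id"], r["depth_m"], r["pressure_kpa"]) for r in reader]


rows = from_json(JSON_TEXT) + from_xml(XML_TEXT) + from_csv(CSV_TEXT)
for r in rows:
    print(r)
```

Once every source produces the same flat shape, later steps such as anomaly checks and loading only need to understand one schema.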

Further, the Spark SQL module lets us run SQL queries directly on DataFrames.

This functionality made it considerably easier to spot anomalies and improve data quality. It allowed us to express complex rules as SQL queries tailored to our client’s precise requirements. Additionally, Spark’s integrated APIs made it easy to load data from various sources and simplified the entire pipeline.
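
As an illustration of SQL-based anomaly checks, the sketch below flags missing values and out-of-range readings with a single query. Python's built-in `sqlite3` module stands in for Spark SQL here so the example runs without a cluster; the query uses only standard constructs (`CASE`, `BETWEEN`, `IS NULL`), and in Spark a query like it would be run through `spark.sql` after registering a DataFrame as a temporary view. The table, columns, sample rows, and the 0 to 1000 kPa plausibility range are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (well_id TEXT, depth_m REAL, pressure_kpa REAL)")
conn.executemany(
    "INSERT INTO readings VALUES (?, ?, ?)",
    [
        ("W-1", 1200.0, 310.5),
        ("W-1", 1250.0, None),    # missing value
        ("W-2", 900.0, 250.0),
        ("W-2", 950.0, 9999.0),   # implausible pressure
        ("W-3", -20.0, 410.2),    # negative depth
    ],
)

# Each flagged row is labelled with the first rule it violates.
ANOMALY_QUERY = """
SELECT well_id,
       depth_m,
       CASE
           WHEN pressure_kpa IS NULL THEN 'missing_pressure'
           WHEN depth_m < 0 THEN 'negative_depth'
           WHEN pressure_kpa NOT BETWEEN 0 AND 1000 THEN 'pressure_out_of_range'
       END AS issue
FROM readings
WHERE pressure_kpa IS NULL
   OR depth_m < 0
   OR pressure_kpa NOT BETWEEN 0 AND 1000
ORDER BY well_id, depth_m
"""

anomalies = conn.execute(ANOMALY_QUERY).fetchall()
for well_id, depth_m, issue in anomalies:
    print(well_id, depth_m, issue)
```

Keeping the rules in SQL means they can be reviewed and adjusted by analysts without touching the surrounding pipeline code.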

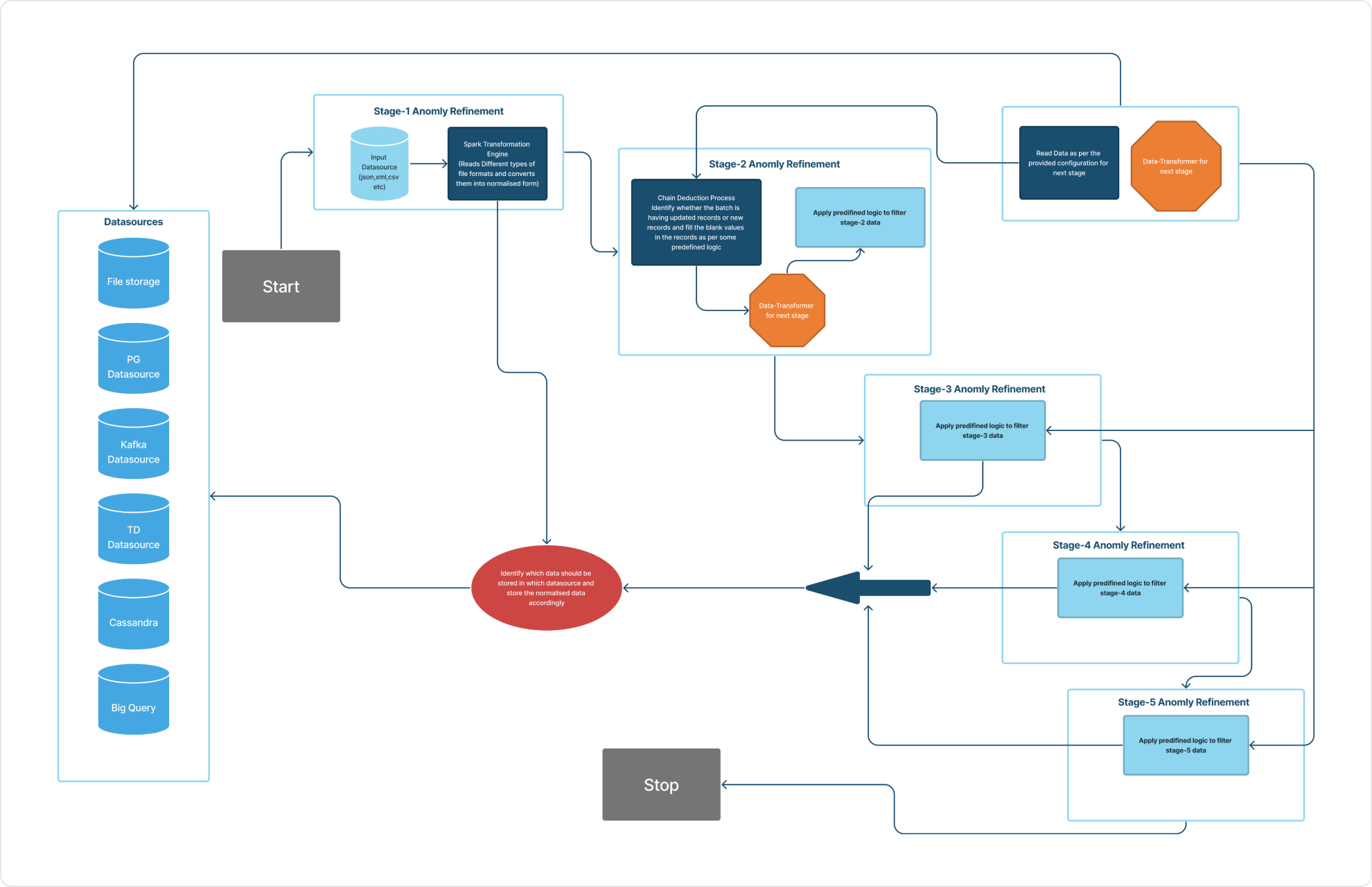

Data Flow Diagram

Conclusion

We built an efficient system on Apache Spark that handles large volumes of data from diverse file formats such as JSON, CSV, and XML. Spark lets us standardize complex data structures through a series of transformations. We then identify anomalies and correct the data using the Spark SQL module. Finally, the processed data is loaded into the designated data source, completing the data handling cycle.

Are you looking for professional Big Data Analytics Consulting Services?
