Project Name

How Ksolves Transformed E-Commerce Data Management with Snowflake’s Scalable Architecture


Our client is a global retail leader with an extensive network of physical stores and e-commerce platforms, serving millions of customers worldwide. Operating across multiple countries, it generates massive volumes of data from transactions, product reviews, supply chain operations, and inventory systems on a daily basis. Data is scattered across S3, multiple databases, and CSV files, with continuous real-time streams alongside historical records.

The client approached us to design a robust, scalable, and centralized data infrastructure that unifies scattered data from S3, databases, and CSV files. The goals were to enable seamless real-time and batch processing; to support advanced analytics and machine learning for personalized marketing, customer segmentation, loyalty programs, recommendations, and product analysis; and to efficiently manage petabytes of structured and unstructured data.

The client faced several challenges:

- Scattered and Disconnected Data Sources: Data scattered across S3, multiple databases, and CSV files created difficulties in consolidation, integration, and efficient analysis.

- Handling Massive Data Volumes: With petabytes of structured and unstructured data generated daily, the client faced significant challenges in efficiently storing, managing, and processing data at scale.

- Real-Time and Batch Processing Challenges: The client generated both real-time and historical data, demanding a unified system capable of supporting streaming and batch processing to deliver timely, accurate, and actionable analytics.

- Customer Data Analysis Complexity: The scale and diversity of data made it challenging to extract meaningful insights for personalized marketing, customer segmentation, loyalty programs, product recommendations, and performance analysis.

- Secure Data Sharing with Remote Teams: The client needed a secure mechanism to share large datasets with remote data science teams while ensuring strict access control, data integrity, and compliance with industry regulations.

- Implementing Machine Learning for Sentiment Analysis: Extracting sentiment insights from customer reviews required a robust infrastructure capable of handling natural language processing (NLP) and AI-driven analytics at scale.

- Scalability and Performance Optimization: With rapidly growing data volumes, the client required a highly scalable architecture capable of sustaining peak performance, eliminating bottlenecks, and delivering fast query and processing times.

Ksolves resolved these challenges with the following solution:

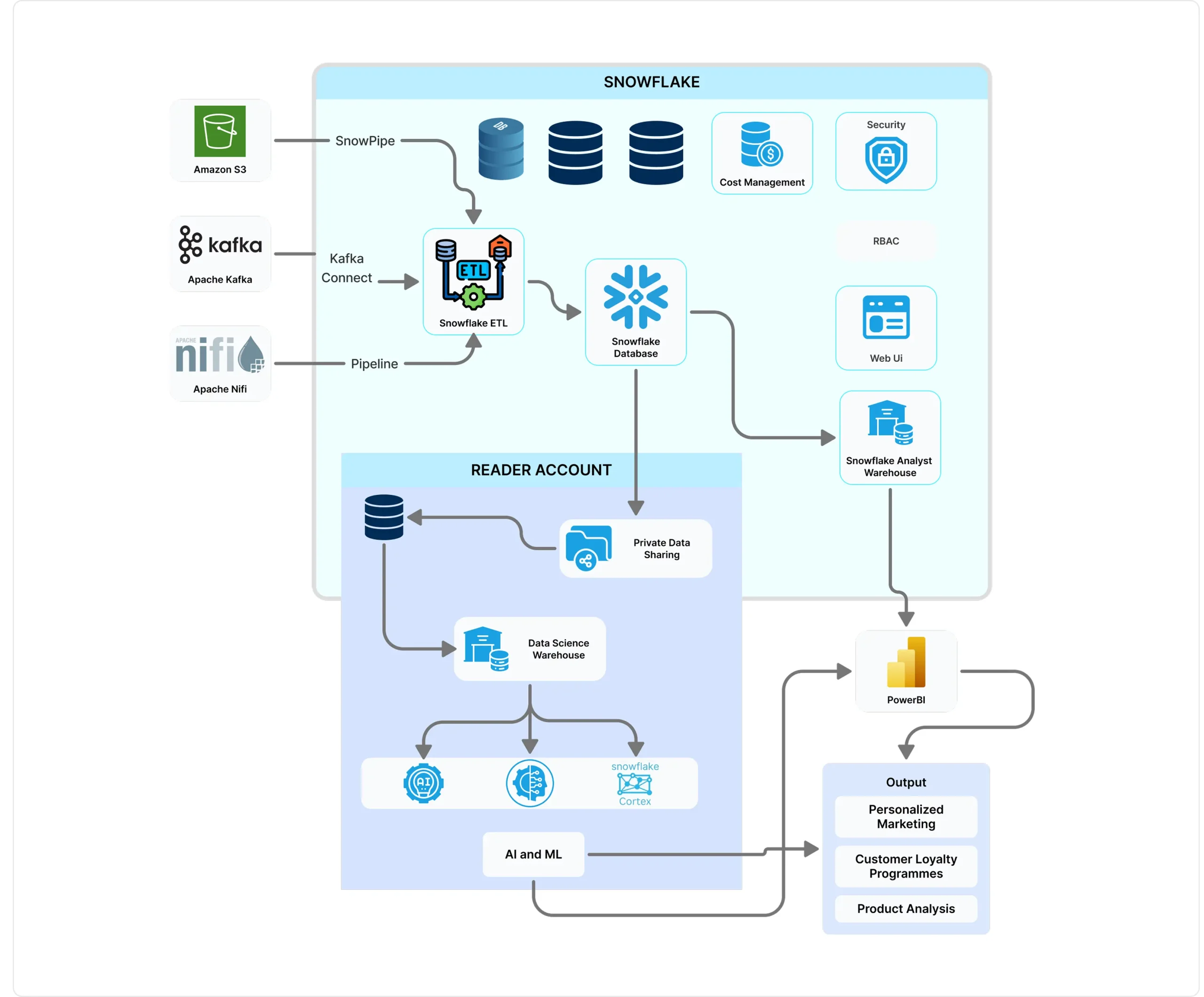

- Data Ingestion from Multiple Sources: The project involved ingesting data from multiple sources into Snowflake. Raw data from the client’s S3 storage buckets was loaded automatically and continuously using Snowpipe. External tables and stages allowed querying data directly from S3 without moving it, helping reduce storage costs. Real-time transactional and event data were streamed into Snowflake through Kafka Connect to ensure insights stayed up to date. Apache NiFi handled the ETL process by extracting data from various databases, transforming it as required, and loading it into Snowflake for structured storage.

- Centralized Data Storage in Snowflake: All real-time streams and batch-processed datasets are stored in Snowflake’s multi-cluster, shared data architecture, providing scalability and high performance. Snowflake’s automatic compression and micro-partitioning make it efficient to manage both structured and semi-structured data formats such as JSON, Avro, and Parquet. The data is organized logically into databases, schemas, and tables optimized for analytical workloads, while the Time Travel and Fail-safe features allow historical data to be restored within their retention periods.

- ETL and Data Processing in Snowflake: Data transformations and cleaning are handled directly within Snowflake using Snowpark and SQL-based transformations, minimizing the need for external ETL tools. Stored procedures and Snowflake Tasks automate the pipeline workflows, ensuring smooth and consistent data processing. The data is then structured and optimized to support analytical queries, customer segmentation, and machine learning applications.

- RBAC (Role-Based Access Control) Implementation: Fine-grained access control ensures that only authorized users can view specific datasets, with permissions assigned by roles such as Admin, Analyst, Data Engineer, and Data Scientist. Remote data science teams use Reader Accounts to securely access shared data without requiring full Snowflake accounts or duplicating datasets. Data security is further strengthened with column-level security, row access policies, and dynamic data masking to protect sensitive customer information.

- Secure Data Sharing & AI Capabilities: Snowflake Private Sharing allows seamless and secure sharing of live datasets with remote teams without duplicating data. Snowflake Cortex AI is used to perform sentiment analysis on customer reviews, extracting AI-driven insights from both structured and unstructured text. By using Snowpark ML, Snowflake Arctic, and Snowflake Cortex, remote teams can harness Snowflake’s compute resources for AI and ML workloads while ensuring robust data security.

- Data Analysis and Visualization: Business intelligence teams connect Power BI directly to Snowflake, enabling real-time data visualization and interactive dashboards. Snowflake’s query acceleration and result caching optimize performance while reducing costs, and the use of materialized views further enhances reporting speed and efficiency.

- Cost Management & Storage-Compute Separation: Snowflake’s storage-compute separation enabled independent scaling. Auto-scaling warehouses dynamically adjust compute power to meet demand while minimizing idle costs. Dedicated warehouses for different workloads ensured resource efficiency. Data compression, lifecycle management, and query cost monitoring optimized expenses.
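Some of the Snowflake building blocks named in the solution above can be sketched as SQL statements. The Python helper below is a minimal illustration under stated assumptions, not the implementation delivered to the client: every pipe, table, stage, policy, column, and role name is hypothetical, and the CSV file format is an assumption. It only renders statement text for a Snowpipe definition, a dynamic data masking policy, and a Cortex sentiment query. Running the statements would require a Snowflake session, which is not shown here.

```python
import re

# Plain identifiers only, optionally qualified as db.schema.object.
_IDENT = re.compile(r"[A-Za-z_][A-Za-z0-9_$]*(\.[A-Za-z_][A-Za-z0-9_$]*){0,2}")


def ident(name: str) -> str:
    """Return the name unchanged if it is a plain identifier, else raise."""
    if not _IDENT.fullmatch(name):
        raise ValueError(f"unsafe identifier: {name!r}")
    return name


def snowpipe_sql(pipe: str, table: str, stage: str) -> str:
    """Render a Snowpipe that continuously copies staged S3 files into a table."""
    return (
        f"CREATE PIPE IF NOT EXISTS {ident(pipe)} AUTO_INGEST = TRUE AS "
        f"COPY INTO {ident(table)} FROM @{ident(stage)} "
        "FILE_FORMAT = (TYPE = 'CSV' SKIP_HEADER = 1)"  # file format is assumed
    )


def masking_policy_sql(policy: str, allowed_roles: list[str]) -> str:
    """Render a masking policy that reveals a string column to listed roles only."""
    roles = ", ".join(f"'{ident(role)}'" for role in allowed_roles)
    return (
        f"CREATE MASKING POLICY IF NOT EXISTS {ident(policy)} "
        "AS (val STRING) RETURNS STRING -> "
        f"CASE WHEN CURRENT_ROLE() IN ({roles}) THEN val ELSE '***MASKED***' END"
    )


def sentiment_sql(table: str, id_col: str, text_col: str) -> str:
    """Render a query that scores each review with SNOWFLAKE.CORTEX.SENTIMENT."""
    return (
        f"SELECT {ident(id_col)}, "
        f"SNOWFLAKE.CORTEX.SENTIMENT({ident(text_col)}) AS sentiment_score "
        f"FROM {ident(table)}"
    )


if __name__ == "__main__":
    # Hypothetical object names, for illustration only.
    statements = [
        snowpipe_sql("raw.orders_pipe", "raw.orders", "raw.s3_orders_stage"),
        masking_policy_sql("email_mask", ["ADMIN", "DATA_ENGINEER"]),
        sentiment_sql("analytics.reviews", "review_id", "review_text"),
    ]
    for statement in statements:
        print(statement + ";")
```

Two caveats apply to this sketch. `AUTO_INGEST = TRUE` only takes effect once event notifications are configured on the S3 bucket. The masking policy must also be attached to a column, for example with `ALTER TABLE ... MODIFY COLUMN ... SET MASKING POLICY`, before it masks anything. Validating identifiers before interpolating them guards against malformed or injected object names.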

- Get instant and historical insights in one place, empowering smarter customer engagement and better product decisions.

- Share live data securely with remote teams, without duplication or delays, using Snowflake Private Sharing.

- Unlock the voice of your customers with AI-powered sentiment analysis through Snowflake Cortex.

- Keep performance high and costs low by scaling storage and compute independently, only paying for what you use.

- Confidently handle massive volumes of data with a fast, reliable, and future-ready Snowflake infrastructure.

By adopting a robust Snowflake architecture, the client gained a unified, secure, and highly scalable data environment capable of managing petabyte-scale datasets in real time. The solution not only streamlined data ingestion, processing, and sharing but also enabled advanced analytics, AI-powered insights, and cost-efficient operations. This transformation empowered the business to enhance customer engagement, optimize operations, and sustain long-term growth in a competitive market.
