Cloud to On-Premises Kubernetes: Top Reasons, Challenges, & Best Practices

August 14, 2025

Kubernetes has become the backbone of modern application delivery, powering everything from agile startups to global enterprises. The Kubernetes market is valued at USD 2.57 billion in 2025, with projections reaching USD 7.07 billion by 2030 (a 22.4% CAGR). For years, the public cloud has been the default environment for running it, offering rapid provisioning, elastic scalability, and managed control planes. Yet the conversation is shifting.

Organizations with stable, high-volume workloads, strict data governance mandates, or specialized performance requirements are beginning to question whether the cloud still delivers optimal value. The emerging answer for many is a return to on-premises Kubernetes, where infrastructure control, predictable costs, and compliance alignment can outweigh the convenience of managed services.

In this post, we compare running Kubernetes on-premises versus in the cloud, explore the strategic and technical factors driving cloud-to-on-prem migrations, address the challenges, highlight proven best practices, and share how Ksolves helped a client execute this transition with measurable success.

Can Kubernetes Run On-Premises?

Absolutely. Kubernetes was designed for portability, so the same APIs and tooling work across data centers, edge locations, private clouds, and public clouds. Deploying Kubernetes on-premises is common in enterprises that require full infrastructure control or must meet strict compliance mandates.

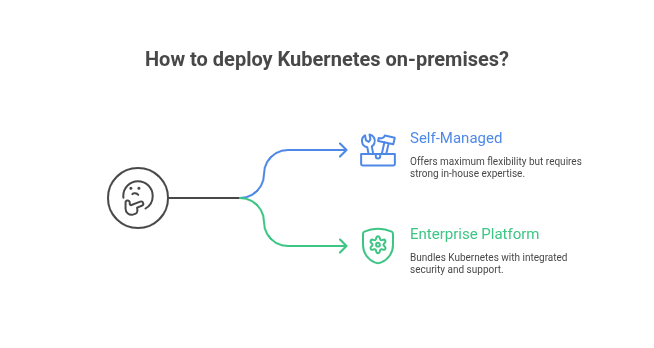

On-premises Kubernetes clusters can be:

- Self-managed using open-source tooling like kubeadm, k0s, or k3s. This offers maximum flexibility but requires strong in-house expertise.

- Built on enterprise platform distributions such as Red Hat OpenShift, VMware Tanzu, or SUSE Rancher. These bundle Kubernetes with integrated security, lifecycle management, and vendor support.

Each approach involves trade-offs between control, operational overhead, feature maturity, and support models. As a result, the choice is highly dependent on organizational priorities and resources.

Cloud vs On-Premises Kubernetes

1. Cost Model

- Cloud: Cloud Kubernetes runs on a pay-as-you-go (OpEx) model. You pay for the compute nodes, managed services, and data transfer as you use them. This makes it easy to scale up quickly when demand spikes. However, if you don’t actively manage resource usage, your cloud bills can grow unexpectedly due to idle resources or high network egress costs.

- On-Prem: With on-premises Kubernetes, you invest upfront in hardware and infrastructure (CapEx), along with ongoing costs like electricity, cooling, and maintenance. While this requires careful capacity planning, it offers more predictable expenses over time. Organizations with stable workloads often find on-premises costs easier to forecast and control.
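To make the cost trade-off concrete, here is a minimal break-even sketch in Python. It assumes a flat monthly cloud bill, a one-time hardware purchase, and a flat monthly on-prem operating cost (power, cooling, maintenance). The function name and every figure in the example are hypothetical, and the model deliberately ignores staffing, hardware refresh cycles, and financing.

```python
import math
from typing import Optional


def break_even_month(capex: float, onprem_monthly_opex: float,
                     cloud_monthly_cost: float) -> Optional[int]:
    """Return the first month in which cumulative on-prem spend is no higher
    than cumulative cloud spend, or None if on-prem never catches up.

    Simplified model (an assumption, not a full TCO analysis):
      on-prem total after m months = capex + onprem_monthly_opex * m
      cloud total after m months   = cloud_monthly_cost * m
    """
    monthly_saving = cloud_monthly_cost - onprem_monthly_opex
    if monthly_saving <= 0:
        # On-prem running costs alone match or exceed the cloud bill.
        return None
    # Smallest integer m with capex <= monthly_saving * m.
    return math.ceil(capex / monthly_saving)


# Hypothetical figures: 120k upfront, 4k/month to run on-prem, 10k/month in the cloud.
print(break_even_month(120_000, 4_000, 10_000))  # 20
```

With these made-up numbers the hardware pays for itself after 20 months, so a workload that stays steady for longer than that favors on-prem. A spiky workload breaks the flat-bill assumption, which is exactly where pay-as-you-go pricing earns its keep.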

2. Control & Customization

- Cloud: Cloud providers run and maintain the Kubernetes control plane for you, which reduces your team’s operational burden. The trade-off is limited access to the control plane itself and to low-level settings such as kernel tuning or custom networking setups.

- On-Prem: On-premises Kubernetes gives you full control over your entire environment, from hardware choices to network configurations and storage options. This level of customization is important for workloads that require specialized hardware, custom kernel modules, or fine-tuned performance optimizations.

3. Compliance & Data Sovereignty

- Cloud: While cloud providers offer compliance certifications and data protection features, meeting strict data residency or regulatory requirements can be complex. Some industries require data to remain physically within specific locations, which isn’t always guaranteed in the cloud.

- On-Prem: On-premises Kubernetes deployments shine when compliance is non-negotiable. Keeping your Kubernetes clusters inside your own data centers makes it easier to comply with data residency laws and regulatory audits, since you control physical access and data flow.

4. Latency & Throughput

- Cloud: For most applications, cloud infrastructure provides excellent performance. However, latency-sensitive workloads, or those that need high bandwidth between components, can be slowed by network hops, and traffic that crosses regions also incurs data transfer fees.

- On-Prem: On-premises Kubernetes is often preferred for ultra-low latency applications like telecom, financial trading, or AI workloads. Running compute and storage close together reduces network latency and eliminates cross-region data transfer charges.

5. Scalability & Elasticity

- Cloud: Cloud platforms offer near-instant, elastic scaling. You can increase or decrease your Kubernetes cluster capacity on demand, paying only for what you use – a big advantage for handling unpredictable workloads or seasonal spikes.

- On-Prem: Scaling on-premises requires planning and procurement cycles since you must buy and install additional hardware. While this means scaling is slower and less flexible, some organizations use hybrid models to burst into the cloud during peak demand.
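Because new hardware takes time to arrive, the practical question on-premises is when to place the order. The Python sketch below assumes usage grows by a fixed percentage each month, which is a simplification; the function names, the growth model, and the example numbers are all hypothetical.

```python
from typing import Optional


def months_until_exhausted(capacity: float, usage: float,
                           monthly_growth: float) -> Optional[int]:
    """Months until usage first exceeds capacity under compound monthly growth.

    monthly_growth is a fraction, e.g. 0.05 for 5% per month.
    Returns 0 if capacity is already exceeded, None if usage never grows.
    """
    if usage > capacity:
        return 0
    if usage <= 0 or monthly_growth <= 0:
        return None
    months = 0
    while usage <= capacity:
        usage *= 1 + monthly_growth
        months += 1
    return months


def latest_order_month(capacity: float, usage: float, monthly_growth: float,
                       lead_time_months: int) -> Optional[int]:
    """Latest month to order so new hardware arrives by the month capacity runs out."""
    exhausted = months_until_exhausted(capacity, usage, monthly_growth)
    if exhausted is None:
        return None
    return max(0, exhausted - lead_time_months)


# Hypothetical cluster: 1,000 vCPUs installed, 600 in use, 5% monthly growth,
# and a 4-month procurement lead time.
print(months_until_exhausted(1_000, 600, 0.05))  # 11
print(latest_order_month(1_000, 600, 0.05, 4))   # 7
```

In practice teams order well ahead of the projected date to leave a safety margin, and a hybrid setup can absorb the overflow if a forecast turns out to be too optimistic.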

Why Move from Cloud to On-Premises Kubernetes?

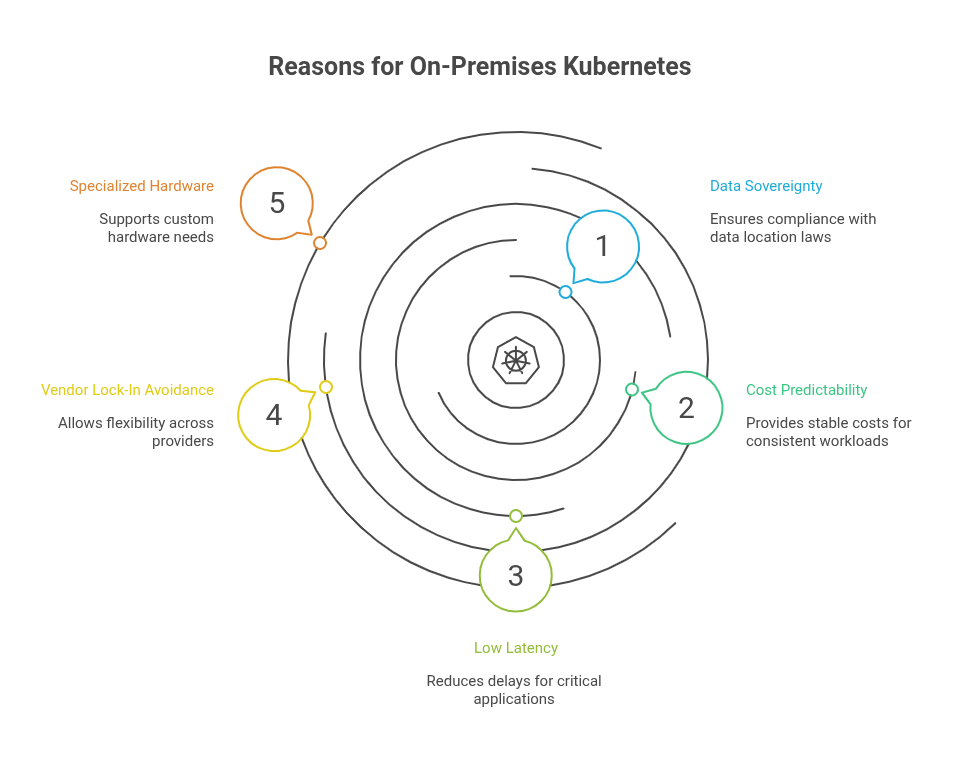

While the public cloud is great for flexibility and quick scaling, there are important reasons why some businesses choose to run Kubernetes on their own infrastructure instead:

- Rules About Where Data Must Stay

Certain industries like finance, healthcare, and government have strict laws about where data can be stored. Running Kubernetes on-premises gives you full control over your data’s location, which makes these legal requirements easier to meet.

- Predictable Costs for Steady Workloads

If your applications run around the clock with little change in demand, paying for that always-on cloud capacity month after month adds up quickly. Owning your hardware makes costs easier to control and avoids surprise charges such as data transfer fees.

- Speed and Low Delay Are Critical

For use cases like stock trading, telecom, or AI, even tiny delays can cause big problems. Running workloads on-premises, with compute and storage placed close together, reduces network delays and improves performance.

- Avoiding Vendor Lock-In

Some companies want to avoid being tied to one cloud provider. Because conformant Kubernetes distributions expose the same core APIs, running Kubernetes on-premises keeps them in control while still letting them move workloads between private data centers and multiple clouds if needed.

- Need for Specialized Hardware

Not all workloads fit neatly into cloud provider offerings. If you need special hardware like powerful GPUs for machine learning or high-speed network cards, running Kubernetes on-premises lets you customize your setup exactly how you want it.

Challenges of Running Kubernetes On-Premises

While Kubernetes on-premises offers control and customization, enterprises frequently encounter tangible obstacles during deployment and operations:

1. Complex Hardware Management and Procurement Delays

Organizations often face long lead times for ordering, configuring, and deploying physical servers. Firmware mismatches or outdated BIOS versions can cause subtle compatibility issues that delay cluster rollout or cause instability.

2. Networking Bottlenecks and Misconfigurations

Many teams struggle to design network topologies that accommodate Kubernetes’ east-west (pod-to-pod) traffic patterns. Without proper load balancing, DNS, and CNI configuration, applications can suffer from latency spikes, packet loss, or security vulnerabilities.

3. Storage Inconsistencies and Performance Issues

Integrating persistent storage is often complicated by incompatible CSI drivers or legacy SAN/NAS infrastructure. Slow or inconsistent I/O can severely impact stateful workloads like databases or message queues, leading to degraded application performance.

4. Securing the Cluster is Harder Than Expected

Misconfigured RBAC policies or unsecured etcd access can expose an entire cluster: overly broad roles let a compromised workload escalate its privileges, and etcd stores all cluster state, including Secrets. Secrets management and image vulnerability scanning are often overlooked or poorly implemented due to a lack of tooling or expertise.
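A cheap first line of defense is auditing RBAC rules for overly broad grants. This minimal Python sketch assumes roles have already been exported as JSON (for example with `kubectl get roles,clusterroles -A -o json`) and inspects only the standard `resources` and `verbs` fields of each rule. The function names, the `ci-deployer` role, and the specific checks are illustrative, not a complete policy.

```python
import json

# Verbs that let a subject read Secret contents ("*" covers all verbs).
SECRET_READ_VERBS = {"get", "list", "watch", "*"}


def risky_rules(role: dict) -> list[str]:
    """Return human-readable findings for overly broad rules in one Role/ClusterRole."""
    name = role.get("metadata", {}).get("name", "<unnamed>")
    findings = []
    # Aggregated ClusterRoles may have "rules": null, so fall back to an empty list.
    for i, rule in enumerate(role.get("rules") or []):
        verbs = set(rule.get("verbs", []))
        resources = set(rule.get("resources", []))
        if "*" in verbs:
            findings.append(f"{name} rule {i}: wildcard verbs")
        if "*" in resources:
            findings.append(f"{name} rule {i}: wildcard resources")
        if "secrets" in resources and verbs & SECRET_READ_VERBS:
            findings.append(f"{name} rule {i}: can read Secrets")
    return findings


def audit(exported: dict) -> list[str]:
    """Audit every item in a `kubectl get ... -o json` List object."""
    return [f for item in exported.get("items", []) for f in risky_rules(item)]


# Hypothetical export containing a single Role.
example = json.loads("""
{"items": [{
  "kind": "Role",
  "metadata": {"name": "ci-deployer"},
  "rules": [
    {"apiGroups": ["apps"], "resources": ["deployments"], "verbs": ["get", "update", "patch"]},
    {"apiGroups": [""], "resources": ["secrets"], "verbs": ["*"]}
  ]
}]}
""")
for finding in audit(example):
    print(finding)
```

Here the deployment rule passes, while the second rule is flagged twice: once for wildcard verbs and once because it grants read access to Secrets.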

5. Monitoring Gaps and Incident Response Delays

Without the managed observability services that cloud providers offer, teams frequently end up with fragmented, DIY monitoring stacks. This slows the detection of outages and degraded performance, which affects SLAs and user experience.

6. Upgrade Failures Causing Downtime

Manual upgrades can cause cluster downtime or workload failures through incompatible Kubernetes versions, untested custom components, or operator error, putting production reliability at risk.
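Two rules from the Kubernetes documentation catch many upgrade mistakes: kubeadm does not support skipping minor versions, and under the version skew policy a kubelet must never be newer than the API server and (from v1.28 onward) may be at most three minor versions older. The Python sketch below encodes those two checks. The function names are hypothetical, it only understands plain `vMAJOR.MINOR.PATCH` strings, and you should confirm the skew limits against the policy for your exact release.

```python
import re


def parse_minor(version: str) -> tuple[int, int]:
    """Parse 'v1.29.4' (or '1.29.4') into (major, minor)."""
    match = re.match(r"^v?(\d+)\.(\d+)", version)
    if not match:
        raise ValueError(f"unrecognized version: {version!r}")
    return int(match.group(1)), int(match.group(2))


def control_plane_upgrade_path(current: str, target: str) -> list[str]:
    """Minor versions to step through, one at a time, from current to target."""
    cur_major, cur_minor = parse_minor(current)
    tgt_major, tgt_minor = parse_minor(target)
    if cur_major != tgt_major or tgt_minor < cur_minor:
        raise ValueError("this sketch only handles forward upgrades within one major version")
    return [f"{cur_major}.{minor}" for minor in range(cur_minor + 1, tgt_minor + 1)]


def kubelet_skew_ok(apiserver: str, kubelet: str, max_older: int = 3) -> bool:
    """True if the kubelet is not newer than the API server and at most max_older minors behind."""
    api_major, api_minor = parse_minor(apiserver)
    kl_major, kl_minor = parse_minor(kubelet)
    if api_major != kl_major:
        return False
    return 0 <= api_minor - kl_minor <= max_older


print(control_plane_upgrade_path("v1.27.9", "v1.30.2"))  # ['1.28', '1.29', '1.30']
print(kubelet_skew_ok("v1.30.2", "v1.27.9"))             # True
print(kubelet_skew_ok("v1.30.2", "v1.26.0"))             # False
```

Running checks like these as a pre-upgrade pipeline step, before anything touches a production node, turns a would-be outage into a blocked change.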

Best Practices for Kubernetes On-Premises

- Treat Infrastructure as Code: Use tools like Terraform and Ansible to provision and configure your clusters, and Helm to package and deploy the applications that run on them. This makes your setup repeatable, consistent, and easy to update or recreate when needed.

- Choose the Right Kubernetes Distribution: If you want full control and a minimal footprint, go for self-managed options like kubeadm or the lightweight k3s. For enterprise needs such as vendor support and integrated features, consider platforms like OpenShift, Rancher, or VMware Tanzu.

- Standardize Networking and Security: Select a network plugin (CNI) that matches your company’s security policies. Use network policies to control traffic between pods, enforce security standards, and grant users and services only the access permissions they need.

- Plan Your Storage Strategy: Use reliable storage (CSI) drivers that are compatible with both your hardware and your Kubernetes version. Put backup and snapshot systems in place to protect your data and allow quick recovery.

- Monitor from the Start: Set up centralized logging, metrics collection, and tracing early. This helps you detect issues quickly and understand your cluster’s health and performance.

- Automate Upgrades and Policy Enforcement: Build automated processes that upgrade the control plane first, one minor version at a time, and then roll worker nodes gradually. Test upgrades in a staging environment before applying them widely, especially for critical workloads, and enforce cluster policies automatically rather than by hand.

- Harden Your Cluster Security: Scan container images for vulnerabilities, sign images, enable admission controllers, encrypt sensitive data (such as Secrets) stored in etcd, and segment your network to limit access.

- Plan Capacity and Track Costs: Because on-prem resources are finite, use resource quotas and autoscaling to manage workloads efficiently. Track resource usage to optimize costs and avoid running out of capacity unexpectedly.
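The API server already rejects pods that would exceed a namespace’s ResourceQuota, but for capacity planning it helps to know ahead of time whether planned workloads will fit. This Python sketch compares a pod’s requests with the remaining `requests.cpu` and `requests.memory` quota, as reported in a ResourceQuota’s `status.hard` and `status.used`. The quantity parser is a deliberately simplified assumption that handles only common suffixes (`m` for CPU; `Ki`/`Mi`/`Gi`/`Ti` and `k`/`M`/`G`/`T` for memory), and all function names and figures are hypothetical.

```python
from decimal import Decimal

BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
DECIMAL_SUFFIXES = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def cpu_millicores(quantity: str) -> int:
    """'500m' -> 500, '2' -> 2000, '0.5' -> 500."""
    if quantity.endswith("m"):
        return int(Decimal(quantity[:-1]))
    return int(Decimal(quantity) * 1000)


def memory_bytes(quantity: str) -> int:
    """'512Mi' -> 536870912, '1G' -> 1000000000, '1024' -> 1024."""
    for suffix, factor in BINARY_SUFFIXES.items():  # two-letter suffixes first
        if quantity.endswith(suffix):
            return int(Decimal(quantity[:-2]) * factor)
    for suffix, factor in DECIMAL_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[:-1]) * factor)
    return int(Decimal(quantity))


def fits_in_quota(hard: dict, used: dict, pod_requests: dict) -> bool:
    """True if the pod's CPU and memory requests fit in the remaining quota."""
    cpu_left = cpu_millicores(hard["requests.cpu"]) - cpu_millicores(used["requests.cpu"])
    mem_left = memory_bytes(hard["requests.memory"]) - memory_bytes(used["requests.memory"])
    return (cpu_millicores(pod_requests["cpu"]) <= cpu_left
            and memory_bytes(pod_requests["memory"]) <= mem_left)


# Hypothetical namespace: 8 CPUs / 16Gi hard limit, 7.5 CPUs / 12Gi already requested.
hard = {"requests.cpu": "8", "requests.memory": "16Gi"}
used = {"requests.cpu": "7500m", "requests.memory": "12Gi"}
print(fits_in_quota(hard, used, {"cpu": "500m", "memory": "2Gi"}))  # True
print(fits_in_quota(hard, used, {"cpu": "750m", "memory": "1Gi"}))  # False
```

Summing these checks over a release plan shows which namespaces, and ultimately which racks, will need more headroom before the next deployment rather than after it fails.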

How Ksolves Helped a Client Move from Cloud to On-Premises Kubernetes

One of our recent clients was facing significant challenges with their managed Kubernetes environment in the cloud. They struggled with unpredictable monthly bills, forced upgrades they didn’t control, and a lack of flexibility over their infrastructure.

To address these issues, we designed a fully on-premises Kubernetes platform tailored to their business needs and migrated their workloads onto it.

Our approach included:

- Deploying a robust stack built with Helm for easy management, Longhorn for reliable storage, Istio for advanced service mesh capabilities, MetalLB for load balancing, and cert-manager to handle TLS certificates automatically.

- Implementing comprehensive monitoring using Prometheus, Grafana, and OpenSearch to provide deep visibility into cluster health and performance.

- Enabling auto-scaling with Kubernetes’ Horizontal Pod Autoscaler (HPA) to ensure stable performance while optimizing resource use.
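For readers new to the HPA, its core scaling rule is documented as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no change made when that ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below reproduces just that rule plus the `minReplicas`/`maxReplicas` bounds. The real controller also applies stabilization windows, scaling policies, and special handling for unready pods and missing metrics, so treat this as an illustration rather than a model of the client’s configuration; the function name and example values are hypothetical.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float, min_replicas: int,
                         max_replicas: int, tolerance: float = 0.1) -> int:
    """Simplified HPA calculation (no stabilization window or scaling policies)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: the HPA skips scaling.
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the bounds set in the HPA spec.
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target -> scale out.
print(hpa_desired_replicas(4, 90, 60, min_replicas=2, max_replicas=10))  # 6
# 4 pods at 63% vs 60%: ratio 1.05 is within tolerance -> unchanged.
print(hpa_desired_replicas(4, 63, 60, min_replicas=2, max_replicas=10))  # 4
# 4 pods at 20% vs 60% -> scale in.
print(hpa_desired_replicas(4, 20, 60, min_replicas=2, max_replicas=10))  # 2
```

On-premises, the ceiling matters more than in the cloud: `maxReplicas` should reflect what the physical nodes can actually hold, since there is no provider capacity to burst into.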

The results were transformative:

- Infrastructure costs dropped by 50%, thanks to predictable hardware investments and the elimination of surprise cloud bills.

- The client gained full control over scaling and upgrades, freeing them from cloud provider constraints.

They made a one-time investment in hardware, replacing the variable operational expenses of cloud billing with stable, forecastable costs.

Conclusion

On-premises Kubernetes offers significant advantages in control, compliance, cost predictability, and performance optimization, especially for organizations with specialized workloads or strict regulatory requirements. However, its successful adoption depends on thorough planning, robust infrastructure, and skilled operational practices to manage complexity and ensure reliability.

If you are considering migrating from cloud to on-premises Kubernetes or want expert guidance to optimize your Kubernetes environment, connect with the Ksolves team. We provide tailored solutions designed to meet your unique business challenges and deliver measurable value.

AUTHOR

Anil Kushwaha, Technology Head at Ksolves, is an expert in Big Data. With over 11 years at Ksolves, he has been pivotal in driving innovative, high-volume data solutions with technologies such as NiFi, Cassandra, Spark, and Hadoop. Passionate about advancing technology, he ensures smooth data warehousing for client success through tailored, cutting-edge strategies.