Apache NiFi Guide

Summary

This guide covers Apache NiFi’s core concepts, architecture, processors, and key features. It highlights NiFi’s benefits for data management, industry use, compliance, and tool comparisons, along with expert support from Ksolves.

Introduction

What is NiFi?

Apache NiFi is a robust, open-source platform designed for automating the movement of data between different systems. Originally developed by the NSA and later contributed to the Apache Software Foundation, NiFi is built on the foundation of NiagaraFiles technology. It enables seamless and real-time data ingestion, transformation, and routing across various systems.

What makes NiFi especially powerful is its ability to handle a wide range of data formats, from logs and geolocation information to social media feeds, and support numerous protocols such as SFTP, HDFS, and Kafka. This flexibility makes it a go-to solution for building dynamic, scalable, and secure data pipelines in real-time environments. Learn why Apache NiFi is the best choice for your business.

Apache NiFi – Core Concepts You Should Know

To get the most out of Apache NiFi, it’s important to understand its fundamental building blocks. These core concepts form the backbone of how data moves, transforms, and is monitored across the platform.

1. Process Group

A Process Group is like a folder or container that holds multiple related data flows. It helps organize complex workflows into manageable, hierarchical units — ideal for modular design, better visibility, and reusability.

2. Data Flow

A Flow in NiFi is a pathway that connects various components like processors and queues. It defines how data moves from one system to another and what transformations happen along the way. Think of it as your custom pipeline for routing and shaping data.

3. Processor

Processors are the workhorses of NiFi. Each processor performs a specific task — fetching data from a source, modifying its content or attributes, and then pushing it to the next stage. Processors are highly configurable and cover a wide range of data operations.

4. FlowFile

A FlowFile is a container for your data in NiFi. It holds the actual content along with metadata in the form of attributes. As FlowFiles pass through the system, processors perform actions like Create, Clone, Route, or Transform, based on business logic.
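To make the content-plus-attributes idea concrete, here is a minimal Python sketch. It is not NiFi’s actual Java implementation; the `FlowFile` class, the `create` helper, and the method names are hypothetical and exist only for illustration. The attribute keys `uuid`, `filename`, and `mime.type` mirror common NiFi attribute names.

```python
from dataclasses import dataclass, field
import uuid


@dataclass(frozen=True)
class FlowFile:
    """Illustrative stand-in for a FlowFile: raw content plus key/value attributes."""
    content: bytes
    attributes: dict = field(default_factory=dict)

    def with_attribute(self, key: str, value: str) -> "FlowFile":
        # Return a new object with one more attribute; the original is left untouched.
        return FlowFile(self.content, {**self.attributes, key: value})

    def clone(self) -> "FlowFile":
        # A clone carries the same content but receives its own identifier.
        return FlowFile(self.content, {**self.attributes, "uuid": str(uuid.uuid4())})


def create(content: bytes, filename: str) -> FlowFile:
    # Hypothetical helper: builds a FlowFile with a fresh identifier and a filename.
    return FlowFile(content, {"uuid": str(uuid.uuid4()), "filename": filename})


original = create(b'{"id": 1}', "order.json")
tagged = original.with_attribute("mime.type", "application/json")
copy = tagged.clone()
```

In this sketch the content stays the same across `tagged` and `copy`, while the attributes change, which is the distinction between data and metadata described above.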

5. Event

Every interaction with a FlowFile is recorded as an Event. These events capture what happened, such as data being received, split, merged, or sent, and provide a trail of how the data was handled through the system.

6. Data Provenance

Data Provenance is NiFi’s built-in tracking system. It logs the history of each FlowFile, including every processor it passed through and every action performed. With its user-friendly UI, it allows you to trace data flow, diagnose problems, and ensure data integrity.

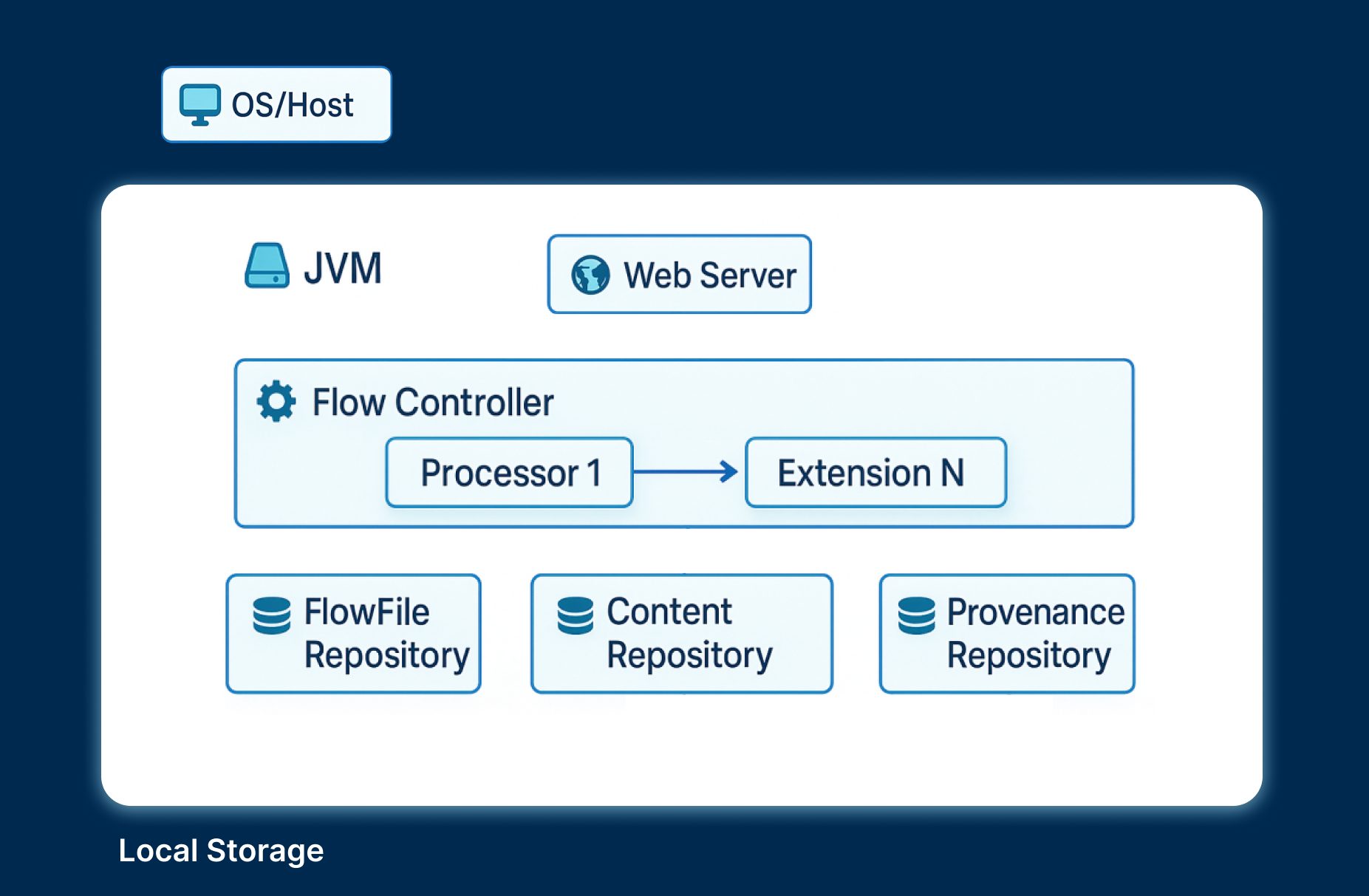

Apache NiFi Architecture

Apache NiFi’s architecture is designed to support the seamless and secure movement of data across diverse systems, all while being scalable, fault-tolerant, and easy to manage. Let’s break down the key components that power this flow-based programming model.

1. Web Server

At the heart of NiFi is a web-based user interface (UI) that enables users to design, monitor, and manage data flows in real-time. This UI is hosted on an embedded web server, which also exposes RESTful APIs to support automation and integration with external systems.

2. Flow Controller

The Flow Controller acts as the central brain of NiFi. It manages the overall flow execution, component scheduling, and resource allocation. It’s responsible for initializing processors, tracking system health, and coordinating all activities across the platform.

3. Extensions and Processors

NiFi is modular and extensible. Processors are pluggable components that handle specific data tasks, like reading from a database, transforming a JSON file, or publishing to Kafka. Developers can also build custom extensions to support unique business needs.

4. FlowFile and FlowFile Repository

A FlowFile represents a unit of data in transit, including content and associated metadata (attributes). The FlowFile Repository maintains the current state and tracking information of every FlowFile. If NiFi crashes, it ensures FlowFiles can be recovered and continue processing from where they left off.

5. Content Repository

The Content Repository is where the actual data (content of FlowFiles) is stored while being processed. It is disk-based and optimized for high throughput, allowing NiFi to scale efficiently and maintain persistence.

6. Provenance Repository

The Provenance Repository stores historical data about each FlowFile’s journey. It logs every event in that journey, such as when the FlowFile was created, modified, routed, dropped, or transmitted. This enables data lineage tracking, auditability, and powerful troubleshooting capabilities.
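The lineage idea can be sketched as an append-only event log that is filtered by FlowFile identifier. This is a simplified illustration in Python, not how NiFi stores provenance on disk; `ProvenanceEvent`, `ProvenanceLog`, and the identifiers such as `ff-1` are hypothetical names. The event types shown (RECEIVE, ROUTE, SEND) follow NiFi’s provenance event naming.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ProvenanceEvent:
    flowfile_id: str
    event_type: str  # for example RECEIVE, ROUTE, SEND, DROP
    component: str   # name of the processor that reported the event


class ProvenanceLog:
    """Append-only list of events; lineage is the ordered subset for one FlowFile."""

    def __init__(self) -> None:
        self._events: List[ProvenanceEvent] = []

    def record(self, flowfile_id: str, event_type: str, component: str) -> None:
        self._events.append(ProvenanceEvent(flowfile_id, event_type, component))

    def lineage(self, flowfile_id: str) -> List[Tuple[str, str]]:
        # Events are kept in insertion order, so the result reads as a timeline.
        return [
            (e.event_type, e.component)
            for e in self._events
            if e.flowfile_id == flowfile_id
        ]


log = ProvenanceLog()
log.record("ff-1", "RECEIVE", "GetFile")
log.record("ff-2", "RECEIVE", "GetFile")
log.record("ff-1", "ROUTE", "RouteOnAttribute")
log.record("ff-1", "SEND", "PutS3Object")
timeline = log.lineage("ff-1")
```

Here `timeline` holds only the three events for `ff-1`, in the order they were recorded, which is the kind of per-FlowFile trail that supports auditing and troubleshooting.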

7. Site-to-Site Protocol

NiFi’s Site-to-Site (S2S) protocol allows multiple NiFi instances or clusters to communicate securely and efficiently. This is useful for distributed data pipelines, where data may flow from edge devices to central clusters or between environments.

8. Cluster Coordinator (in Clustered Mode)

In a NiFi cluster, one node acts as the Cluster Coordinator, which manages heartbeats and node membership. All nodes are identical in terms of functionality, and the coordinator ensures synchronization and workload distribution across the cluster.

9. Security Layer

NiFi integrates security at multiple levels:

- Transport Layer Security (TLS) for secure data transmission

- Authentication & Authorization for user-level access

- Encrypted repositories for protecting sensitive information at rest

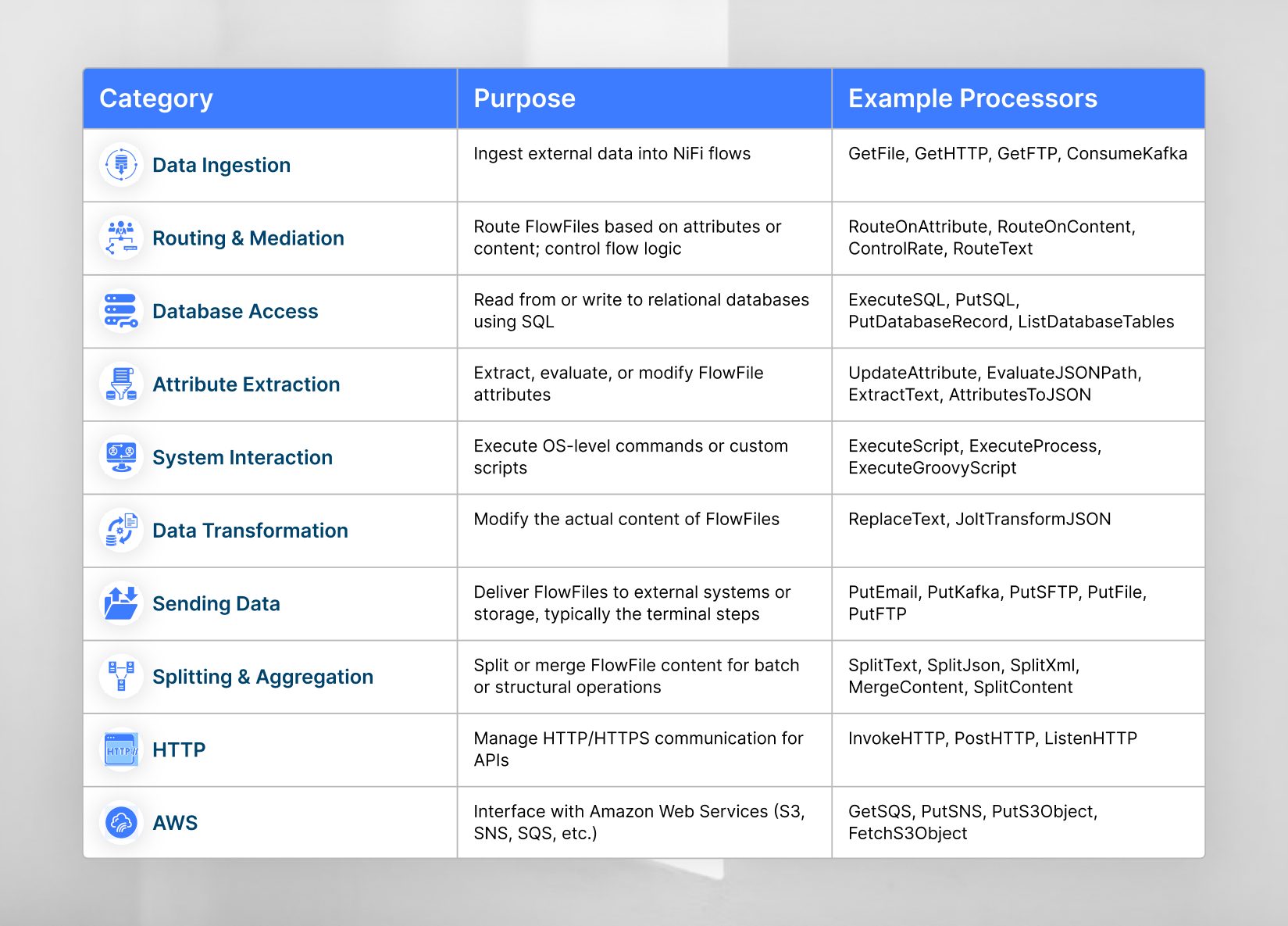

Understanding NiFi Processors & Their Categories

NiFi processors are the foundational elements of every dataflow—they perform tasks like data ingestion, transformation, routing, enrichment, and delivery. Each processor processes FlowFiles and routes them along one or more Relationships, enabling complex routing logic.

How Processors Work

Every processor:

- Accepts data through incoming connections

- Processes the data based on configured properties

- Sends the result through outgoing relationships

For example, a processor like GetFile pulls files from a local directory, while PutS3Object sends data to Amazon S3.
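The accept, process, and route cycle described above can be sketched in a few lines of Python. This is a conceptual model only, not the NiFi processor API (which is Java); the function names `validate_json` and `run` and the relationship names `valid` and `invalid` are assumptions made for the example.

```python
import json
from typing import Dict, List, Tuple

# A FlowFile is modelled here as (content, attributes) for brevity.
FlowFile = Tuple[bytes, Dict[str, str]]


def validate_json(flowfile: FlowFile) -> Tuple[str, FlowFile]:
    """Process one FlowFile and name the relationship it should follow."""
    content, attributes = flowfile
    try:
        json.loads(content)
    except ValueError:
        # Unparseable content leaves through the "invalid" relationship unchanged.
        return "invalid", flowfile
    # Parseable content is tagged with an attribute and leaves through "valid".
    return "valid", (content, {**attributes, "mime.type": "application/json"})


def run(incoming: List[FlowFile]) -> Dict[str, List[FlowFile]]:
    """Drain the incoming connection and group results by outgoing relationship."""
    outgoing: Dict[str, List[FlowFile]] = {"valid": [], "invalid": []}
    for flowfile in incoming:
        relationship, result = validate_json(flowfile)
        outgoing[relationship].append(result)
    return outgoing


routed = run([(b'{"a": 1}', {}), (b"not json", {"filename": "x"})])
```

Each relationship in `routed` would typically be wired to a different downstream connection, which is how a single processor can drive branching logic in a flow.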

Processor Categories in NiFi

To improve usability, NiFi groups processors into categories based on their functionality. Here’s a breakdown of the most common categories:

NiFi Processor Categorization Table

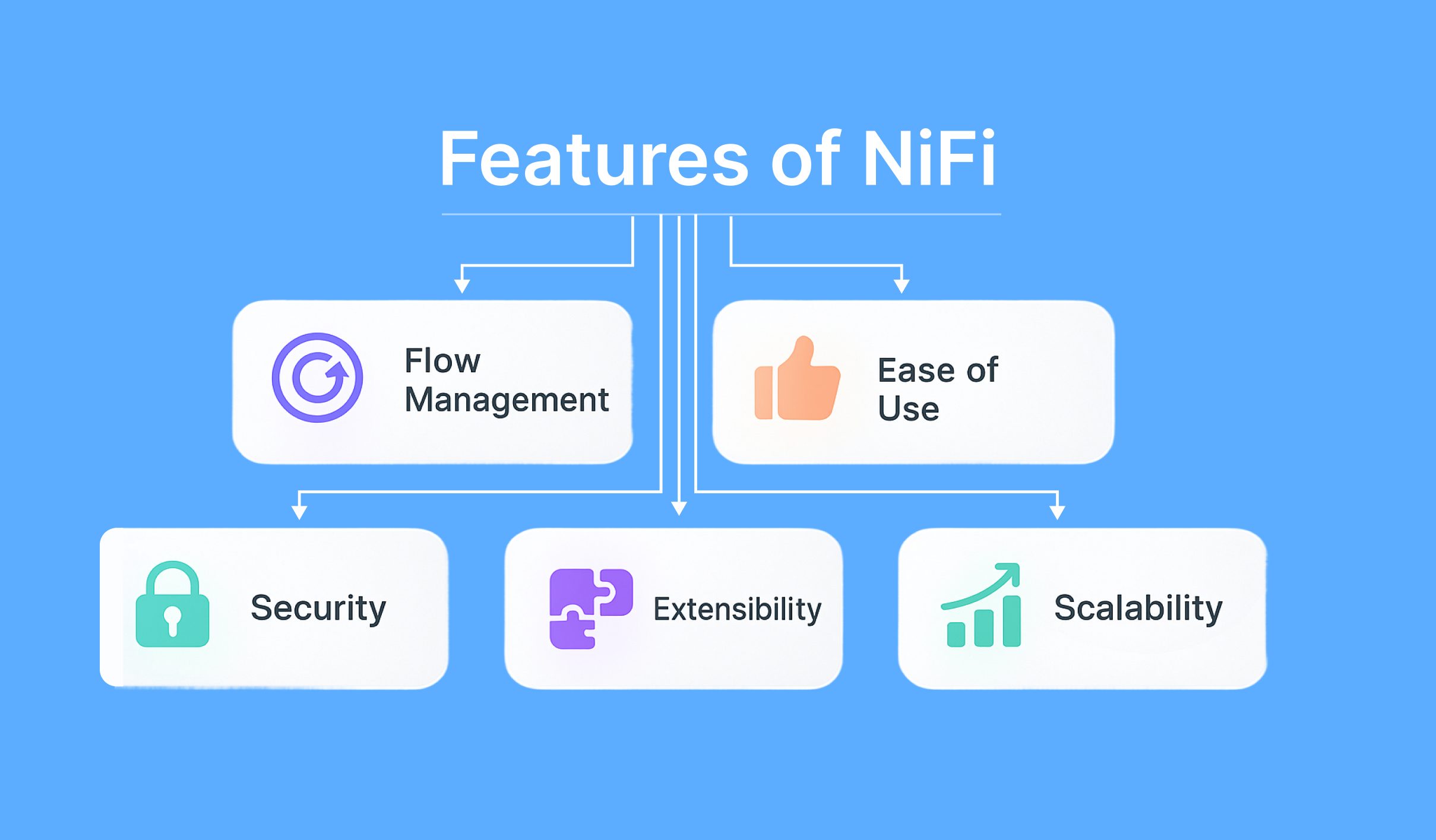

Key Features of NiFi

NiFi’s key features fall into five areas:

- Flow Management

- Ease of Use

- Security

- Extensibility

- Scalability

Flow Management

- Guaranteed Delivery: Ensures reliable data flow using persistent storage and write-ahead logs.

- Backpressure & Buffering: Prevents overload by pausing or aging out data when queues are full.

- Prioritized Queuing: Supports oldest-first, newest-first, or custom data processing priorities.

- Flow-Specific QoS: Allows tuning for low-latency or high-throughput depending on data criticality.
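Backpressure and prioritized queuing can be illustrated together with a small bounded priority queue. The Python sketch below is an assumption-laden model, not NiFi’s connection implementation: the `Connection` class, its `offer` and `poll` methods, and the rule that a lower number is served first are all choices made for the example.

```python
import heapq
import itertools


class Connection:
    """Bounded queue: refuses new items once the backpressure threshold is reached."""

    def __init__(self, backpressure_threshold: int) -> None:
        self.backpressure_threshold = backpressure_threshold
        self._heap = []
        # Tie-breaker so items with equal priority keep their arrival order.
        self._sequence = itertools.count()

    def is_full(self) -> bool:
        return len(self._heap) >= self.backpressure_threshold

    def offer(self, item: str, priority: int) -> bool:
        if self.is_full():
            # Signal the upstream component to pause instead of dropping data.
            return False
        heapq.heappush(self._heap, (priority, next(self._sequence), item))
        return True

    def poll(self):
        # Return the queued item with the lowest priority number, or None if empty.
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


queue = Connection(backpressure_threshold=2)
accepted_a = queue.offer("a", priority=5)
accepted_b = queue.offer("b", priority=1)
accepted_c = queue.offer("c", priority=0)  # refused: the queue is at its threshold
first_out = queue.poll()
```

Once a consumer drains an item with `poll`, `is_full` becomes false again and the upstream side can resume, which is the pause-and-resume behavior that keeps a slow destination from overwhelming the flow.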

Ease of Use

- Visual Flow Builder: Real-time drag-and-drop UI to design, modify, and monitor data flows.

- Flow Templates: Reuse and share pre-built flow designs across teams for consistency.

- Data Provenance: Tracks full data lineage for audit, compliance, and debugging.

- Rolling History & Replay: Enables viewing and replaying past data for troubleshooting.

Security

- Encrypted Transfers: Uses 2-way SSL for secure data exchange between systems.

- Role-Based Access Control: Granular user permissions down to the component level.

- Multi-Tenant Access: Supports multiple teams with isolated, secure flow management.

- Secure Credentials: Sensitive inputs like passwords are encrypted and hidden from the UI.

Extensibility

- Pluggable Extensions: Add custom processors, services, and reporting tools.

- Classloader Isolation: Prevents library conflicts between different extensions.

- Site-to-Site Protocol: Securely connects NiFi instances or external apps with ease.

Scalability

- Cluster Deployment: Scale horizontally with multi-node clusters for big data workloads.

- Edge-Friendly: Runs on low-resource devices for IoT and edge processing.

- Parallel Execution: Boost performance with concurrent task configurations.

- Built-in Load Balancing: Manages failover and throughput across nodes automatically.

Key Benefits of Apache NiFi

- Real-Time Data Flow: Enables instant data movement between sources and systems.

- Low-Code Platform: Design complex workflows visually with minimal coding effort.

- End-to-End Visibility: Offers full data lineage and tracking with built-in provenance.

- Reliable & Resilient: Ensures guaranteed delivery with built-in failover and replay.

- Highly Scalable: Easily scales from edge devices to enterprise-level clusters.

- Protocol & Format Agnostic: Supports various formats (JSON, XML, CSV) and protocols (SFTP, Kafka, HTTP).

- Flexible Integration: Connects with legacy, cloud, IoT, and modern data systems.

- Secure by Design: Built-in encryption, access control, and sensitive data protection.

- Easy Reusability: Templates and versioning simplify flow replication and reuse.

- Multi-Tenant Support: Supports multiple teams or business units securely in one platform.

- Extensible Framework: Customize with custom processors and third-party integrations.

- Operational Efficiency: Reduce manual handoffs and data engineering bottlenecks.

Apache NiFi Benefits For Different Industries

Apache NiFi supports diverse industry needs from real-time fraud detection in finance, to secure patient data integration in healthcare, to IoT sensor data processing in manufacturing. Its flexibility, scalability, and built-in security make it a smart choice for modern data workflows across sectors. Learn how NiFi is benefiting diverse industries.

Apache NiFi Benefits For Data Management

Apache NiFi simplifies and strengthens data management with its powerful flow-based approach. It enables seamless data ingestion from diverse sources, real-time transformation, and routing with full traceability. With features like data provenance, back-pressure control, fine-grained prioritization, and visual flow design, teams gain better control, observability, and reliability in their pipelines. NiFi also supports secure, scalable, and repeatable workflows, making it ideal for managing both batch and streaming data across complex ecosystems. Understand the benefits of NiFi in data management.

Overcoming Big Data Cluster Management Challenges

Managing Big Data clusters can quickly become overwhelming as data volume, velocity, and variety grow. From ensuring system reliability to handling real-time processing and scaling infrastructure, organizations often face performance bottlenecks, security concerns, and high operational overhead. With the right architecture, monitoring tools, and automation strategies, you can gain control over your cluster environment, improve system health, and streamline operations — all while reducing downtime and costs.

Handling Failures & Recovery in NiFi Data Ingestion

Apache NiFi simplifies data ingestion, but failures such as unreachable destinations or schema mismatches can still occur. The traditional recovery pattern uses UpdateAttribute and RouteOnAttribute to count retries and route exhausted FlowFiles to a dead-letter queue (DLQ). NiFi 1.10 introduced the RetryFlowFile processor, which streamlines this by handling retries with configurable delays, reducing complexity and improving fault tolerance. Read more about Data Ingestion Using NiFi: Failure And Recovery.
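The retry-then-DLQ logic can be sketched as a counter kept in a FlowFile attribute. The Python below models the decision only and is not the RetryFlowFile processor itself; the attribute name `retry.count`, the relationship names `retry` and `dead_letter`, and the default of three retries are assumptions chosen for illustration.

```python
from typing import Dict, Tuple


def route_after_failure(
    attributes: Dict[str, str], max_retries: int = 3
) -> Tuple[str, Dict[str, str]]:
    """Increment the retry counter and decide where a failed FlowFile goes next."""
    attempts = int(attributes.get("retry.count", "0")) + 1
    updated = {**attributes, "retry.count": str(attempts)}
    if attempts <= max_retries:
        # Still within budget: send the FlowFile back for another attempt.
        return "retry", updated
    # Budget exhausted: hand the FlowFile to the dead-letter path for inspection.
    return "dead_letter", updated


# Simulate a destination that never recovers.
attributes = {"filename": "orders.csv"}
decisions = []
for _ in range(4):
    relationship, attributes = route_after_failure(attributes)
    decisions.append(relationship)
```

In this run the FlowFile is retried three times and then routed to the dead-letter path on the fourth failure. In a real flow, the retry path would usually include a delay before the next attempt.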

What is a NiFi Upgrade?

An Apache NiFi upgrade refers to the process of moving from an older version of NiFi to a newer one. This is done to take advantage of new features, bug fixes, performance improvements, and security patches that come with updated releases.

Upgrading NiFi can involve:

- Backing up existing flow configurations and data

- Stopping the current NiFi instance

- Installing the new version (usually in a separate directory)

- Migrating configurations, custom processors, and templates

- Validating the compatibility of existing flows

- Restarting the upgraded instance and testing thoroughly
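The backup step at the top of this list can be sketched as a timestamped copy of the configuration directory. This Python example assumes the configuration lives in a `conf` directory under the NiFi home directory; the function name `backup_conf` and both path parameters are hypothetical, and a real upgrade would also need to account for repositories, custom extensions, and any vendor-specific guidance.

```python
import shutil
import time
from pathlib import Path


def backup_conf(nifi_home: Path, backup_root: Path) -> Path:
    """Copy <nifi_home>/conf into a new timestamped folder under backup_root."""
    source = nifi_home / "conf"
    if not source.is_dir():
        raise FileNotFoundError(f"No conf directory found at {source}")
    # A timestamped name keeps earlier backups from being overwritten.
    target = backup_root / f"conf-{time.strftime('%Y%m%d-%H%M%S')}"
    shutil.copytree(source, target)
    return target
```

It would be called with the existing installation path and a backup location, for example `backup_conf(Path("/opt/nifi"), Path("/var/backups/nifi"))`, where both paths are placeholders. Running it after stopping the instance avoids copying files that are mid-write.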

Learn more in NiFi 2.0 Upgrade, New Features, Migration Challenges & How to Navigate Them.

Why Compliance Matters in Apache NiFi Environments

Apache NiFi plays a crucial role in helping organizations enforce compliance and regulatory controls by providing robust features such as:

- Encrypted data in transit and at rest: NiFi supports TLS/SSL and encrypted content repositories, ensuring sensitive information is protected during transfer and storage.

- Fine-grained authentication and authorization: Seamless integration with LDAP, Kerberos, OIDC, client certificates, and support for role-based and multi-tenant access controls.

- Detailed data provenance & lineage tracking: Every FlowFile action is logged, enabling full traceability (who, what, when, where) — critical for GDPR, HIPAA, SOC 2, etc.

- Secure parameterization: Sensitive variables (e.g., API keys, passwords) are encrypted within parameter contexts to prevent leaks.

- Policy-driven access with audit trails: Centralized enforcement via Apache Ranger or NiFi policies, along with immutable audit logs of all configuration changes and deployments.

Why It Matters For Your Organization?

Apache NiFi Vs Other Tools

Ksolves: Hire a Trusted NiFi Partner For Your Project

Looking for a reliable partner to streamline your data flow architecture? Ksolves is a trusted Apache NiFi development company with proven expertise in building scalable, secure, and real-time data integration solutions. From designing custom processors to end-to-end NiFi implementation, our certified developers ensure seamless data movement and governance across systems. Whether you are modernizing legacy pipelines or enabling real-time analytics, Ksolves delivers NiFi solutions tailored to your business needs, on time and within budget. We also offer comprehensive Apache NiFi consulting and support services, helping you optimize performance, ensure compliance, and scale with confidence.

Conclusion

Apache NiFi is a versatile, open-source platform that simplifies building secure, scalable, and real-time data pipelines. With its low-code, drag-and-drop interface, it enables seamless integration across diverse systems, supports multiple data formats and protocols, and ensures complete visibility through data provenance. From IoT and finance to healthcare and big data analytics, NiFi adapts to varied industry needs.

Partnering with Ksolves ensures you maximize NiFi’s capabilities by optimizing its performance, enhancing compliance, and enabling effortless scalability. Together, we can transform complex data workflows into efficient, actionable insights that drive faster, smarter decision-making and give your business a competitive edge in today’s data-driven world. Ready to streamline your data flows with Apache NiFi? Contact Ksolves today at sales@ksolves.com