Apache Spark Benefits: Reasons Why Enterprises Are Moving to This Data Engineering Tool



May 23, 2022


Apache Spark is an open-source big data framework that offers benefits such as real-time stream processing, fault tolerance, and code reusability. Since its inception, it has proven effective for enterprise data processing, querying, and fast analytic reporting. It has become one of the most popular big data processing frameworks and is used extensively by industries and businesses that deal with large volumes of data. Internet companies such as eBay, Netflix, and Yahoo use Apache Spark extensively.

If we look at Apache Spark customers by industry, the largest segments are Computer Software (30%) and Information Technology and Services (15%). Spark is also being adopted rapidly in many other industries, including financial services, hospitals and healthcare, and retail.

Why choose Apache Spark?

Apache Spark is a leading open-source data processing engine used for batch processing, machine learning, stream processing, and large-scale SQL. It is designed to make big data processing faster and easier. Developers can write Spark code in the language they prefer, including Scala, Java, Python, and R. You can use it to build applications and libraries or to perform analytics on big data.

Apache Spark offers the following libraries to support different kinds of workloads:

- MLlib: machine learning capabilities.

- GraphX: graph creation and processing.

- Spark SQL: SQL operations on structured data.

- Spark Streaming: real-time processing of streaming data.

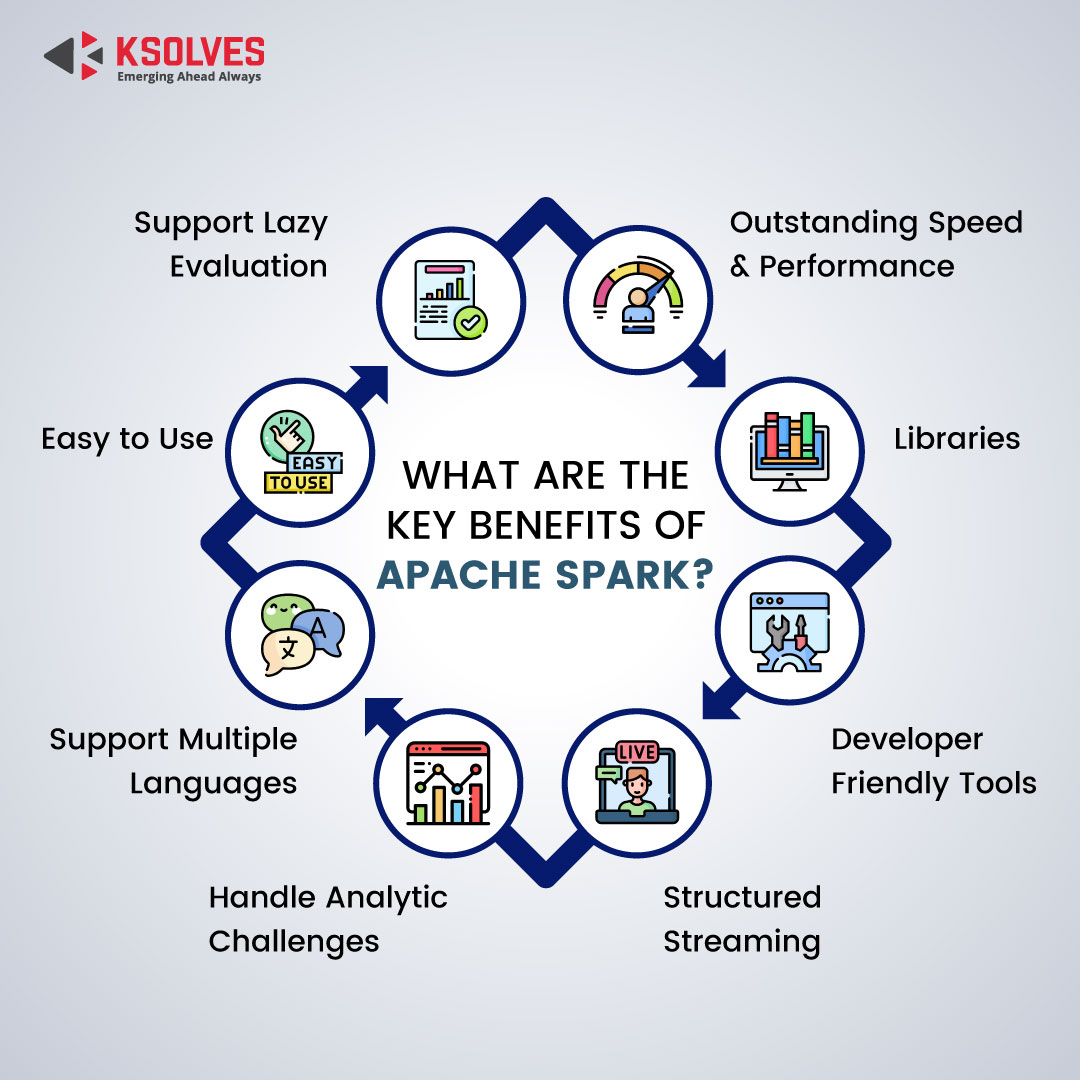

What are the key benefits of Apache Spark?

Apache Spark has transformed the Big Data landscape. It has emerged as one of the most efficient and actively developed big data tools and plays a major role in reshaping the big data market. It is an open-source distributed computing platform with powerful advantages over other available solutions, which make it an efficient choice of big data framework. Let’s look at some common Apache Spark benefits that encourage companies to adopt this data engineering tool.

Outstanding speed and performance

In Big Data, processing speed is one of the most important factors, and it is a major reason Apache Spark has become popular among data scientists. For large-scale data processing, Spark has been reported to run workloads up to 100 times faster than Hadoop MapReduce when the data fits in memory. It also scales to very large deployments: Spark has been run on clusters of more than 8,000 nodes and used to process multiple petabytes of data.

Developer-Friendly Tools

Apache Spark benefits developers as well as organizations. Its developer-friendly APIs let developers work with distributed data through simple method calls, without managing the low-level details of distribution themselves. Spark also provides language bindings for R, Python, Scala, and Java, so data scientists and application developers can harness scalable tools and dependable performance without digging through those details.

Libraries

Apache Spark is known for its libraries for machine learning and graph analysis. Its machine learning library, MLlib, supports feature extraction, transformation, and selection on structured datasets. Spark also includes libraries for SQL and structured data (Spark SQL), graph analytics (GraphX), and stream processing (Spark Streaming and the newer Structured Streaming).

Structured Streaming

Structured Streaming is one of the key reasons companies choose Apache Spark. Demand for stream processing is growing quickly, and Structured Streaming lets developers write and maintain streaming jobs with the same DataFrame and SQL APIs they use for batch processing. Businesses use Spark's streaming support for applications such as stream mining, network optimization, and real-time scoring of analytic models.

Handle Analytic Challenges

Apache Spark is a powerful tool for a wide range of analytics challenges. Its low-latency, in-memory data processing lets it handle many types of analytics workloads, and it includes well-designed libraries for machine learning and graph analytics algorithms.

Easy to use

Apache Spark provides easy-to-use APIs for operating on large datasets. It offers more than 80 high-level operators that make it straightforward to build parallel applications.

Support for multiple languages

Apache Spark supports multiple languages for writing code, including Python, Java, R, and Scala. This flexibility addresses a common limitation of Hadoop MapReduce, whose native API is Java; other languages need workarounds such as Hadoop Streaming.

Support for Lazy Evaluation

Apache Spark supports lazy evaluation: transformations such as filters and maps are only recorded, and nothing is executed until an action (such as collecting or displaying results) requests output. Spark can then plan the whole chain of operations at once. For example, if a user wants data filtered by date but needs only the first ten matching records, Spark can stop reading once it has found ten matches instead of filtering every record and then discarding all but ten. This saves both time and resources.
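The toy model below is not Spark code and not how Spark is implemented internally; it is a plain-Python sketch of the same idea. Transformations only record steps, and the action pulls records through a chain of generators until it has enough results. The `LazyDataset` class and the `read_events` source are hypothetical names for illustration. In PySpark the analogous pattern would be `df.filter(...).limit(10).collect()`, where `filter` and `limit` are transformations and `collect` is the action.

```python
from itertools import islice


class LazyDataset:
    """Toy model of lazy evaluation (hypothetical; not Spark's implementation)."""

    def __init__(self, source, steps=()):
        self._source = source       # zero-argument callable that yields records
        self._steps = tuple(steps)  # recorded transformations, not yet executed

    def filter(self, predicate):
        # Transformation: remember the step and return a new dataset; read nothing.
        return LazyDataset(self._source, self._steps + (("filter", predicate),))

    def map(self, fn):
        # Transformation: likewise only recorded.
        return LazyDataset(self._source, self._steps + (("map", fn),))

    def take(self, n):
        # Action: build a generator chain and pull only n results through it.
        records = iter(self._source())
        for kind, fn in self._steps:
            records = filter(fn, records) if kind == "filter" else map(fn, records)
        return list(islice(records, n))


records_read = 0  # how many source records were actually read


def read_events():
    """Hypothetical source: one million events, each tagged with a weekday 0-6."""
    global records_read
    for i in range(1_000_000):
        records_read += 1
        yield {"id": i, "weekday": i % 7}


# Defining the pipeline reads nothing yet.
pipeline = LazyDataset(read_events).filter(lambda e: e["weekday"] == 3).map(lambda e: e["id"])
read_before_action = records_read

# The action reads records only until ten matches have been found.
first_ten = pipeline.take(10)
read_after_action = records_read
print(read_before_action, read_after_action, first_ten)
```

Here the action reads 67 of the million records, because the tenth match has id 66. Spark gains a further benefit from deferring work: with the whole plan known before execution, its optimizer can also rearrange operations, for example pushing filters closer to the data source.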

Different Industries using Apache Spark

Today, many companies use Apache Spark for business intelligence and advanced analytics. For example, Yahoo uses it to provide personalized content to its visitors, while Nickelodeon and MTV use it for real-time big data analytics to enhance their customer experience.

Other industries use it for purposes such as:

- In the financial sector, banks use Apache Spark to recommend new financial products to their customers. It helps banks monitor client activity and identify key issues. It is also used to access and analyze customers' social media profiles, emails, and call recordings. Investment banks use it to analyze stock prices for future trends.

- In the healthcare industry, it is used to analyze patients’ records together with their past clinical data to get a clear picture of their health problems.

- The retail industry uses Apache Spark to attract customers with personalized services.

- In the manufacturing industry, it is used to reduce downtime of internet-connected devices by recommending when preventive maintenance should be performed.

Apart from these, many business verticals such as entertainment, media, travel, and e-commerce use Apache Spark to analyze huge amounts of data and make better decisions.

All these factors show that Apache Spark works well for companies in improving their business development and providing outstanding customer service.

Sum Up

Apache Spark is the next-gen technology for real-time and big data processing. No doubt, many companies are shifting to Apache Spark because of its outstanding efficiency and performance. Apache Spark can prove a game-changer for boosting and fostering their business growth. If you are also looking for Apache Spark development services to give a new edge to your business, then Ksolves professionals are here to help you.

Ksolves is a trusted IT development company whose clients have praised it for delivering successful projects within agreed deadlines. Our Apache Spark developers have hands-on experience with the technology and work directly with clients to meet all of their customized needs. We provide a complete package of big data consulting services, including Apache Spark, Apache NiFi, Apache Kafka, and many more.

=================================================================

Frequently Asked Questions (FAQ)

What are the Apache Spark Applications?

Apache Spark is being adopted rapidly across industries, resulting in a growing number of unique and diverse Spark applications that have been implemented and run successfully in the real world. Examples include processing streaming data, machine learning, and fog computing.

What are the common uses of Spark?

Some of the common uses include:

- Running SQL or ETL batch jobs over large datasets.

- Processing real-time streaming data from sensors and other sources.

- Analyzing complex sessions.

- Triggering responses based on streaming data.

Which companies are using Apache Spark?

There are many companies using Apache Spark, including Amazon, Art.com, Databricks, eBay Inc., Alibaba, Taobao, Nokia Solutions and Networks, and many more.

What are the key components of the Apache Spark Ecosystem?

The Apache Spark ecosystem comprises three main categories:

Language Support: Spark integrates with languages such as Scala, Java, Python, and R, so you can build applications and perform analytics in the language of your choice.

Core Components: Spark Core, Spark SQL, Spark Streaming, Spark MLlib, and GraphX.

Cluster Management: Spark can run in standalone mode or on Hadoop YARN, Apache Mesos (deprecated since Spark 3.2), or Kubernetes.


AUTHOR


Atul Khanduri, a seasoned Associate Technical Head at Ksolves India Ltd., has 12+ years of expertise in Big Data, Data Engineering, and DevOps. Skilled in Java, Python, Kubernetes, and cloud platforms (AWS, Azure, GCP), he specializes in scalable data solutions and enterprise architectures.
